Machine learning-based models that can autonomously generate various types of content have become increasingly advanced over the past few years. These frameworks have opened new possibilities for filmmaking and for compiling datasets to train robotics algorithms.

While some existing models can generate realistic or artistic images based on text descriptions, developing AI that can generate videos of moving human figures based on human instructions has so far proved more challenging. In a paper pre-published on the server arXiv and presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2024, researchers at Beijing Institute of Technology, BIGAI, and Peking University introduce a promising new framework that can effectively tackle this task.

“Early experiments in our earlier work, HUMANIZE, indicated that a two-stage framework could enhance language-guided human motion generation in 3D scenes, by decomposing the task into scene grounding and conditional motion generation,” Yixin Zhu, co-author of the paper, told Tech Xplore.

“Some works in robotics have also demonstrated the positive impact of affordance on the model’s generalization ability, which inspires us to employ scene affordance as an intermediate representation for this complex task.”

The new framework introduced by Zhu and his colleagues builds on a generative model they introduced a few years ago, called HUMANIZE. The researchers set out to improve this model’s ability to generalize well across new problems, for instance creating realistic motions in response to the prompt “lie down on the floor,” after learning to effectively generate a “lie down on the bed” motion.

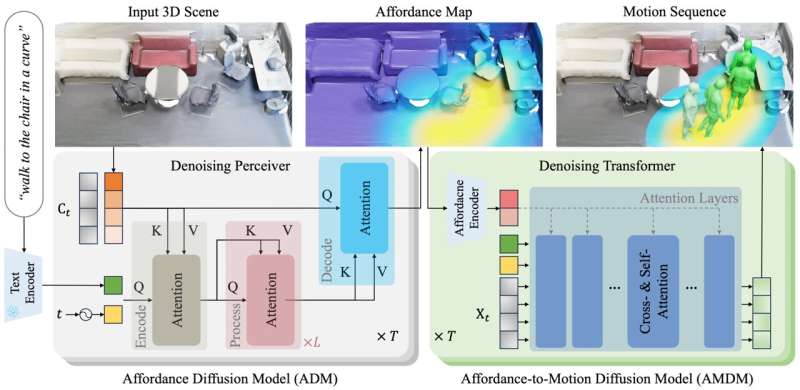

“Our method unfolds in two stages: an Affordance Diffusion Model (ADM) for affordance map prediction and an Affordance-to-Motion Diffusion Model (AMDM) for generating human motion from the description and pre-produced affordance,” Siyuan Huang, co-author of the paper, explained.

“By utilizing affordance maps derived from the distance field between human skeleton joints and scene surfaces, our model effectively links 3D scene grounding and conditional motion generation inherent in this task.”
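To make the idea of a distance-derived affordance map concrete, here is a minimal sketch in plain Python. The article does not give the formula the researchers use, so everything specific here is an assumption for illustration: the function name `affordance_map`, the Gaussian falloff, the `sigma` parameter, and the toy coordinates. It only shows how distances between skeleton joints and scene points can be turned into per-point scores that are high near the body and close to zero far from it.

```python
import math

def affordance_map(scene_points, joints, sigma=0.5):
    """Return one row per scene point and one column per joint.

    Each value lies in (0, 1]: close to 1 when the joint is near the
    scene point, close to 0 when it is far away.
    sigma is a hypothetical falloff scale in scene units.
    """
    rows = []
    for point in scene_points:
        row = []
        for joint in joints:
            distance = math.dist(point, joint)  # Euclidean distance
            # Assumed Gaussian falloff; not taken from the paper.
            row.append(math.exp(-0.5 * (distance / sigma) ** 2))
        rows.append(row)
    return rows

# Toy data: three points sampled on a floor, two joints of a lying figure.
floor = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
joints = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1)]
amap = affordance_map(floor, joints)
```

In this toy case, the floor points directly under the joints receive scores near 1, while the point three units away receives a score near 0. That gives a rough picture of how such a map can mark out the region of a scene relevant to a motion.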

The team’s new framework has various notable advantages over previously introduced approaches for language-guided human motion generation. First, the representations it relies on clearly delineate the region associated with a user’s descriptions/prompts. This improves its 3D grounding capabilities, allowing it to create convincing motions with limited training data.

“The maps utilized by our model also offer a deep understanding of the geometric interplay between scenes and motions, aiding its generalization across diverse scene geometries,” Wei Liang, co-author of the paper, said. “The key contribution of our work lies in leveraging explicit scene affordance representation to facilitate language-guided human motion generation in 3D scenes.”

This study by Zhu and his colleagues demonstrates the potential of conditional motion generation models that integrate scene affordances and representations. The team hopes that their model and its underlying approach will spark innovation within the generative AI research community.

The new model they developed could soon be perfected further and applied to various real-world problems. For instance, it could be used to produce realistic animated films using AI or to generate realistic synthetic training data for robotics applications.

“Our future research will focus on addressing data scarcity through improved collection and annotation strategies for human-scene interaction data,” Zhu added. “We will also enhance the inference efficiency of our diffusion model to bolster its practical applicability.”

More information:

Zan Wang et al, Move as You Say, Interact as You Can: Language-guided Human Motion Generation with Scene Affordance, arXiv (2024). DOI: 10.48550/arxiv.2403.18036

© 2024 Science X Network

Citation:

A new framework to generate human motions from language prompts (2024, April 23) retrieved 26 April 2024 from https://techxplore.com/news/2024-04-framework-generate-human-motions-language.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
