Caltech neuroscientists are making promising progress toward showing that a device known as a brain–machine interface (BMI), which they developed to implant into the brains of patients who have lost the ability to speak, could one day help all such patients communicate by merely thinking, without speaking or miming.

In 2022, the team reported that their BMI had been successfully implanted and used by a patient to communicate unspoken words. Now, reporting in the journal Nature Human Behaviour, the scientists have shown that the BMI has worked successfully in a second human patient.

“We are very enthusiastic about these new findings,” says Richard Andersen, the James G. Boswell Professor of Neuroscience and director and leadership chair of the Tianqiao and Chrissy Chen Brain–Machine Interface Center at Caltech, who described the earlier research in a recent public lecture at Caltech. “We reproduced the results in a second individual, which means that this is not dependent on the particulars of one person’s brain or where exactly their implant landed. This is indeed more likely to hold up in the larger population.”

BMIs are being developed and tested to help patients in a number of ways. For example, some work has focused on developing BMIs that can control robotic arms or hands. Other groups have had success at predicting participants’ speech by analyzing brain signals recorded from motor areas when a participant whispered or mimed words.

But predicting what somebody is thinking, that is, detecting their internal dialogue, is much more difficult because it does not involve any movement, explains Sarah Wandelt, Ph.D., lead author on the new paper, who is now a neural engineer at the Feinstein Institutes for Medical Research in Manhasset, New York.

The new research is the most accurate yet at predicting internal words. In this case, brain signals were recorded from single neurons in a brain area called the supramarginal gyrus, located in the posterior parietal cortex (PPC). The researchers had found in a previous study that this brain area represents spoken words.

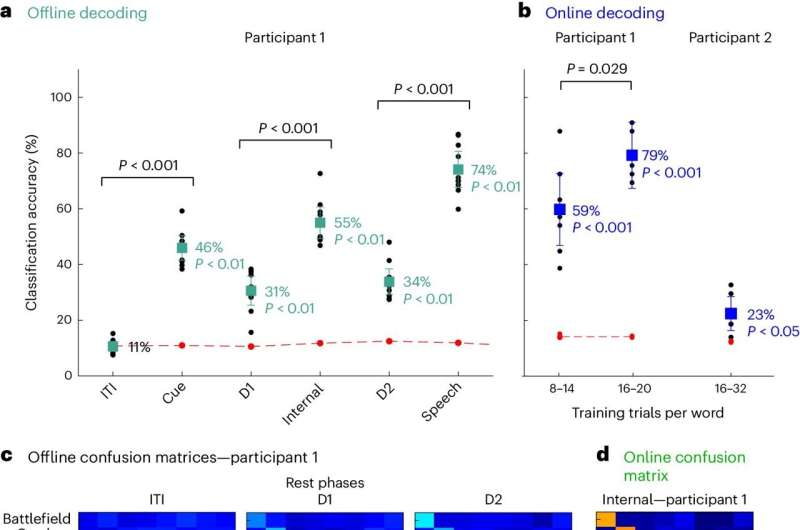

In the current study, the researchers first trained the BMI device to recognize the brain patterns produced when certain words were spoken internally, or thought, by two tetraplegic participants. This training period took only about 15 minutes. The researchers then flashed a word on a screen and asked the participant to “say” the word internally. The results showed that the BMI algorithms were able to predict the eight words tested, including two nonsensical words, with an average of 79% and 23% accuracy for the two participants, respectively.

“Since we were able to find these signals in this particular brain region, the PPC, in a second participant, we can now be sure that this area contains these speech signals,” says David Bjanes, a postdoctoral scholar research associate in biology and biological engineering and an author of the new paper. “The PPC encodes a large variety of different task variables. You could imagine that some words could be tied to other variables in the brain for one person. The likelihood of that being true for two people is much, much lower.”

The work is still preliminary but could help patients with brain injuries, paralysis, or diseases, such as amyotrophic lateral sclerosis (ALS), that affect speech. “Neurological disorders can lead to complete paralysis of voluntary muscles, resulting in patients being unable to speak or move, but they are still able to think and reason. For that population, an internal speech BMI would be incredibly helpful,” Wandelt says.

The researchers point out that BMIs cannot be used to read people’s minds: the device would need to be trained on each person’s brain separately, and it only works when a person focuses on the particular word.

Additional authors on the paper, “Representation of internal speech by single neurons in human supramarginal gyrus,” include Kelsie Pejsa, a lab and clinical studies manager at Caltech, and Brian Lee and Charles Liu, both visiting associates in biology and biological engineering from the Keck School of Medicine of USC. Bjanes and Liu are also affiliated with the Rancho Los Amigos National Rehabilitation Center in Downey, California.

More information:

Sarah K. Wandelt et al, Representation of internal speech by single neurons in human supramarginal gyrus, Nature Human Behaviour (2024). DOI: 10.1038/s41562-024-01867-y

Citation:

Brain-machine interface device predicts internal speech in second patient (2024, May 15)

retrieved 15 May 2024

from https://techxplore.com/information/2024-05-brain-machine-interface-device-internal.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.
