Recently, the team led by Professor Xu Chengzhong and Assistant Professor Li Zhenning from the University of Macau's State Key Laboratory of Internet of Things for Smart City unveiled the Context-Aware Visual Grounding Model (CAVG).

This model stands as the first Visual Grounding autonomous driving model to integrate natural language processing with large language models. The team published the study in Communications in Transportation Research.

Amid the growing interest in autonomous driving technology, industry leaders in both the automotive and tech sectors have demonstrated driverless vehicles that can navigate safely around obstacles and handle emergency situations.

Yet the public remains cautious about handing full control to AI systems. This underscores the importance of developing a system that lets passengers issue voice commands to control the vehicle. Such an endeavor spans two key domains: computer vision and natural language processing (NLP).

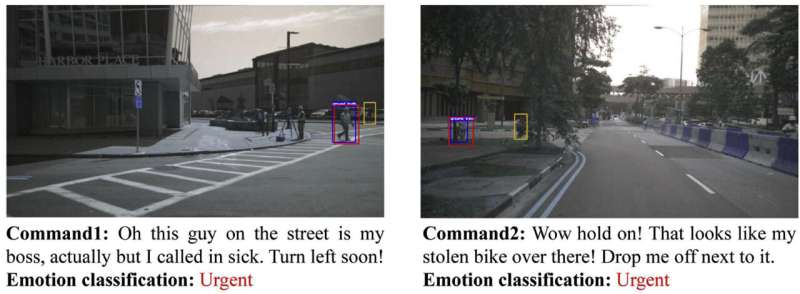

A pivotal research challenge lies in using cross-modal algorithms to forge a robust link between intricate verbal instructions and real-world contexts, so that the driving system can grasp passengers' intentions and intelligently choose among different goals.

In response to this challenge, Thierry Deruyttere and colleagues launched the Talk2Car challenge in 2019. The competition tasks researchers with pinpointing the most semantically accurate regions in front-view images of real-world traffic scenes, based on provided textual descriptions.

Owing to the rapid advancement of Large Language Models (LLMs), linguistic interaction with autonomous vehicles has become a realistic possibility. The article first frames the problem of aligning textual instructions with visual scenes as a mapping task: textual descriptions must be converted into vectors that accurately correspond to the most suitable subregion among the candidate regions.
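To make the mapping framing concrete, here is a minimal Python sketch, assuming the command and each candidate region have already been encoded as vectors of the same length. It simply picks the candidate with the highest cosine similarity; the function names and toy vectors are hypothetical illustrations, not the scoring CAVG actually uses.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def ground(text_vec: Sequence[float], region_vecs: List[Sequence[float]]) -> int:
    """Return the index of the candidate region most similar to the command vector."""
    scores = [cosine(text_vec, r) for r in region_vecs]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical embeddings: one command vector and three candidate regions.
command = [0.9, 0.1, 0.0]
regions = [[0.0, 1.0, 0.0], [0.8, 0.2, 0.1], [0.1, 0.0, 1.0]]
print(ground(command, regions))  # → 1
```

In a real system the vectors would come from trained text and vision encoders; cosine similarity is only one plausible matching score.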

To address this, it introduces the CAVG model, built on a cross-modal attention mechanism. Following the two-stage methods framework, CAVG uses the CenterNet model to delineate numerous candidate regions within an image and then extracts a regional feature vector for each. The model is structured around an encoder-decoder framework comprising Text, Emotion, Vision, and Context encoders, alongside a Cross-Modal encoder and a Multimodal decoder.
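The two-stage idea can be sketched as follows, under heavy simplifying assumptions: the candidate boxes (which CAVG obtains from CenterNet) are hard-coded, each regional feature vector is obtained by average-pooling a toy feature map inside its box, and a plain dot product with a text vector stands in for the full encoder-decoder. All names and values here are hypothetical.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # rows x cols x channels


@dataclass
class Box:
    """Candidate region in feature-map coordinates (half-open row/col ranges)."""
    row0: int
    col0: int
    row1: int
    col1: int


def region_feature(fmap: FeatureMap, box: Box) -> Vector:
    """Average-pool the feature vectors that fall inside one candidate box."""
    cells = [fmap[r][c] for r in range(box.row0, box.row1)
             for c in range(box.col0, box.col1)]
    dim = len(cells[0])
    return [sum(cell[k] for cell in cells) / len(cells) for k in range(dim)]


def two_stage_ground(fmap: FeatureMap, boxes: List[Box], text_vec: Vector) -> int:
    """Stage 1 output (boxes) is given; stage 2 scores each pooled region."""
    feats = [region_feature(fmap, b) for b in boxes]
    scores = [sum(t * f for t, f in zip(text_vec, feat)) for feat in feats]
    return max(range(len(boxes)), key=lambda i: scores[i])


# Hypothetical 2x2 feature map with 2 channels and two proposals.
fmap = [[[1.0, 0.0], [1.0, 0.0]],
        [[0.0, 1.0], [0.0, 1.0]]]
boxes = [Box(0, 0, 1, 2), Box(1, 0, 2, 2)]  # top row, bottom row
print(two_stage_ground(fmap, boxes, [0.0, 1.0]))  # → 1
```

Separating proposal from scoring is what makes the approach "two-stage": the detector can be swapped without changing how regions are matched to language.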

To handle the complexity of contextual semantics and the nuances of human emotion, the article leverages GPT-4V and integrates a novel multi-head cross-modal attention mechanism with a Region-Specific Dynamics (RSD) layer. This layer modulates attention and interprets the cross-modal inputs, helping the model identify, among all candidates, the region that most closely matches the given instruction.
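For readers unfamiliar with multi-head cross-modal attention, the sketch below shows the generic scaled dot-product form, with a text-side query attending over region features. It is an assumption-laden illustration: learned projection matrices are replaced by the identity (each head just takes a slice of the feature dimensions), and the RSD layer is not modeled because the article does not describe its internals. All names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(
    query: Vector, keys: List[Vector], values: List[Vector], num_heads: int
) -> Tuple[Vector, List[List[float]]]:
    """Multi-head scaled dot-product attention from one text query to region features.

    Hypothetical simplification: no learned projections, so head h just
    uses the h-th contiguous slice of the feature dimensions.
    """
    dim = len(query)
    if dim % num_heads != 0:
        raise ValueError("feature dimension must be divisible by num_heads")
    head_dim = dim // num_heads
    output: Vector = []
    head_weights: List[List[float]] = []
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        q = query[lo:hi]
        # Similarity between the text query and each region key, scaled by sqrt(head_dim)
        logits = [sum(a * b for a, b in zip(q, k[lo:hi])) / math.sqrt(head_dim)
                  for k in keys]
        weights = softmax(logits)
        head_weights.append(weights)
        # Attention-weighted sum of region values for this head's slice
        output.extend(sum(w * v[j] for w, v in zip(weights, values))
                      for j in range(lo, hi))
    return output, head_weights


# Hypothetical 4-dim features for two candidate regions.
text_query = [1.0, 0.0, 1.0, 0.0]
regions = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
out, weights = cross_modal_attention(text_query, regions, regions, num_heads=2)
# Average attention across heads and take the most-attended region.
best = max(range(len(regions)), key=lambda i: sum(w[i] for w in weights) / len(weights))
print(best)  # → 0
```

Using several heads lets different slices of the representation attend to different regions; in a trained model each head would have its own learned projections.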

Furthermore, to evaluate the model's generalizability, the study devised testing environments with added complexities: low-visibility nighttime settings, urban scenes with dense traffic and complex object interactions, environments with ambiguous instructions, and scenarios with significantly reduced visibility. These scenarios were designed to make accurate prediction harder.

According to the findings, the proposed model sets new benchmarks on the Talk2Car dataset. It also proves data-efficient, achieving strong results with reduced training data in the CAVG (50%) and CAVG (75%) configurations, and it outperforms other approaches across various specialized challenge datasets.

Future research will aim to improve the precision with which textual commands are integrated with visual data in autonomous navigation, while also harnessing large language models as sophisticated assistants in autonomous driving technologies.

This work will also incorporate a broader array of data modalities, including Bird's Eye View (BEV) imagery and trajectory data. The goal is to build comprehensive deep learning systems that can synthesize and exploit multimodal information, significantly improving the effectiveness and performance of these models.

More information:

Haicheng Liao et al, GPT-4 enhanced multimodal grounding for autonomous driving: Leveraging cross-modal attention with large language models, Communications in Transportation Research (2024). DOI: 10.1016/j.commtr.2023.100116

Provided by

Tsinghua University Press

Citation:

Voice on the wheel: Study introduces an encoder-decoder framework for AI systems (2024, April 29)

retrieved 29 April 2024

from https://techxplore.com/information/2024-04-voice-wheel-encoder-decoder-framework.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
